1. Environment Preparation

1.1 Installation Plan

| Role | IP | Components |
| --- | --- | --- |
| k8s-master1 | 192.168.80.45 | etcd, api-server, controller-manager, scheduler, docker |
| k8s-node01 | 192.168.80.46 | etcd, kubelet, kube-proxy, docker |
| k8s-node02 | 192.168.80.47 | etcd, kubelet, kube-proxy, docker |

Software versions:

| Software | Version | Notes |
| --- | --- | --- |
| OS | Ubuntu 16.04.6 LTS | |
| Kubernetes | 1.21.4 | |
| Etcd | v3.5.0 | |
| Docker | 19.03.9 | |

1.2 System Settings

```shell
# Set the hostname: run the matching command on each node
hostnamectl set-hostname k8s-master1   # on 192.168.80.45
hostnamectl set-hostname k8s-node01    # on 192.168.80.46
hostnamectl set-hostname k8s-node02    # on 192.168.80.47

# Name resolution (all nodes)
cat >> /etc/hosts <<EOF
192.168.80.45 k8s-master1
192.168.80.46 k8s-node01
192.168.80.47 k8s-node02
EOF

# Disable swap now and keep it disabled after reboot
swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Pass bridged traffic through iptables (the net.bridge.* keys need br_netfilter)
modprobe br_netfilter
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

# Time synchronization
apt install ntpdate -y
ntpdate ntp1.aliyun.com
crontab -e
# add this line in the editor:
# */30 * * * * /usr/sbin/ntpdate -u ntp1.aliyun.com >> /var/log/ntpdate.log 2>&1
```
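The sed expression above edits /etc/fstab in place. You can run the same expression on a copy first to see which lines it will comment out. The sketch below does that. `preview_swap_off` is a hypothetical name. Note that the pattern only matches "swap" when it has a space on both sides, so an entry that separates its fields with tabs would be left untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print what the swap-disabling sed from 1.2 would produce,
# without modifying the file (no -i flag).
preview_swap_off() {
  local fstab="$1"
  # Same expression as above: prefix '#' to every line containing " swap "
  sed '/ swap / s/^\(.*\)$/#\1/g' "$fstab"
}

# Usage sketch:
#   preview_swap_off /etc/fstab
```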

2. Install Docker

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz
tar zxvf docker-19.03.9.tgz
mv docker/* /usr/bin
docker version

cat > /lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start docker
systemctl status docker
systemctl enable docker
```

3. TLS Certificates

3.1 Certificate Tools

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64
wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64
wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64
mv cfssl_1.5.0_linux_amd64 /usr/local/bin/cfssl
mv cfssljson_1.5.0_linux_amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_1.5.0_linux_amd64 /usr/local/bin/cfssl-certinfo
# make all three tools executable
chmod +x /usr/local/bin/cfssl*
```

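3.2 Certificate Overview

The generated certificate and key files are used by each component as follows:

| Component | Certificates | Keys | Notes |
| --- | --- | --- | --- |
| etcd | ca.pem, etcd.pem | etcd-key.pem | |
| apiserver | ca.pem, kube-apiserver.pem | kube-apiserver-key.pem | |
| controller-manager | ca.pem, kube-controller-manager.pem | ca-key.pem, kube-controller-manager-key.pem | kubeconfig |
| scheduler | ca.pem, kube-scheduler.pem | kube-scheduler-key.pem | kubeconfig |
| kubelet | ca.pem | | kubeconfig + token |
| kube-proxy | ca.pem, kube-proxy.pem | kube-proxy-key.pem | kubeconfig |
| kubectl | ca.pem, admin.pem | admin-key.pem | |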

3.3 CA Certificate

CA: Certificate Authority

```shell
mkdir -p /root/ssl && cd /root/ssl

cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ],
  "ca": {
    "expiry": "87600h"
  }
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

ls ca*
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem
```

3.4 etcd Certificate

Note: the IP addresses in hosts are the host IPs of the etcd cluster members.

```shell
cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.80.45",
    "192.168.80.46",
    "192.168.80.47"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "etcd",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

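3.5 kube-apiserver Certificate

Note: the IP addresses in hosts are the host IPs of the Kubernetes master nodes plus the cluster IP of the kubernetes Service. That cluster IP is the first IP of the service-cluster-ip-range passed to kube-apiserver, e.g. 10.96.0.1.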

```shell
cat > kube-apiserver-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.80.1",
    "192.168.80.2",
    "192.168.80.45",
    "192.168.80.46",
    "192.168.80.47",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver
```
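If you change the Service range, the 10.96.0.1 entry in the hosts list must change with it. Below is a pure-bash sketch for computing that address from the CIDR. The function names are hypothetical, and the sketch assumes IPv4 input written without leading zeros in any octet.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compute the first usable address of a Service CIDR,
# which kube-apiserver assigns to the built-in "kubernetes" Service and which
# therefore has to appear in the kube-apiserver certificate's hosts list.

# Dotted-quad IPv4 -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted-quad IPv4
int_to_ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Network address of the CIDR plus one
first_service_ip() {
  local net="${1%/*}" len="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

# Usage sketch:
#   first_service_ip 10.96.0.0/16   # prints 10.96.0.1
```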

3.6 kube-controller-manager Certificate

```shell
cat > kube-controller-manager-csr.json <<EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

3.8 kube-scheduler Certificate

```shell
cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

3.9 admin Certificate

Later on, kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods.

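kube-apiserver predefines several ClusterRoleBindings for RBAC. For example, cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, and that ClusterRole grants permission to call every kube-apiserver API.

The O field sets the certificate's Group to system:masters. When kubectl uses this certificate to access kube-apiserver, two things happen:

- Authentication succeeds, because the certificate is signed by the CA.
- All API access is granted, because the certificate's group is the pre-authorized system:masters.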

```shell
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

ls admin*
admin.csr  admin-csr.json  admin-key.pem  admin.pem
```
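With client certificates, Kubernetes takes the subject's CN as the user name and the O field as the group. The sketch below prints that identity straight from a CSR JSON file. It is a rough, line-oriented regex hack, not a JSON parser. It assumes each key sits on its own line, as in the heredocs above, and it reports only the first O value. `csr_identity` is a hypothetical name. Applied to kube-proxy-csr.json, it would show user system:kube-proxy in group k8s.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the Kubernetes identity (CN -> user, O -> group)
# that a client certificate built from a cfssl CSR JSON file will carry.
# Assumes the one-key-per-line layout used in this guide's CSR files.
csr_identity() {
  local csr="$1" user group
  # '"CN":' does not match the country line '"C": "CN",'
  user=$(sed -n 's/.*"CN": *"\([^"]*\)".*/\1/p' "$csr" | head -n1)
  # '"O":' does not match '"OU":'
  group=$(sed -n 's/.*"O": *"\([^"]*\)".*/\1/p' "$csr" | head -n1)
  echo "user=$user group=$group"
}

# Usage sketch:
#   csr_identity admin-csr.json
```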

Once the cluster is up, run kubectl get clusterrolebinding cluster-admin -o yaml. The output shows that the subject of ClusterRoleBinding cluster-admin has kind Group and name system:masters, and that its roleRef is the ClusterRole cluster-admin. In other words, every user or service account in the system:masters group holds the cluster-admin role. That is why kubectl has administrative rights over the whole cluster.

```shell
kubectl get clusterrolebinding cluster-admin -o yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: 2017-04-11T11:20:42Z
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: cluster-admin
  resourceVersion: "52"
  selfLink: /apis/rbac.authorization.k8s.io/v1/clusterrolebindings/cluster-admin
  uid: e61b97b2-1ea8-11e7-8cd7-f4e9d49f8ed0
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:masters
```

3.10 kube-proxy Certificate

CN sets the certificate's User to system:kube-proxy.

kube-apiserver predefines the ClusterRoleBinding system:node-proxier, which binds User system:kube-proxy to the ClusterRole system:node-proxier. That ClusterRole grants permission to call the proxy-related kube-apiserver APIs.

```shell
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

3.11 Certificate Information

```shell
cfssl-certinfo -cert kube-apiserver.pem
{
  "subject": {
    "common_name": "kubernetes",
    "country": "CN",
    "organization": "k8s",
    "organizational_unit": "System",
    "locality": "BeiJing",
    "province": "BeiJing",
    "names": [
      "CN",
      "BeiJing",
      "BeiJing",
      "k8s",
      "System",
      "kubernetes"
    ]
  },
  "issuer": {
    "common_name": "kubernetes",
    "country": "CN",
    "organization": "k8s",
    "organizational_unit": "System",
    "locality": "BeiJing",
    "province": "BeiJing",
    "names": [
      "CN",
      "BeiJing",
      "BeiJing",
      "k8s",
      "System",
      "kubernetes"
    ]
  },
  "serial_number": "275867496157961939649344217740970264800633176866",
  "sans": [
    "localhost",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "127.0.0.1",
    "192.168.80.1",
    "192.168.80.2",
    "192.168.80.45",
    "192.168.80.46",
    "192.168.80.47",
    "10.96.0.1"
  ],
  "not_before": "2021-06-09T05:20:00Z",
  "not_after": "2031-06-07T05:20:00Z",
  "sigalg": "SHA256WithRSA",
  "authority_key_id": "",
  "subject_key_id": "E3:84:0F:9C:00:07:4A:8F:5C:B2:35:45:A0:50:4D:3E:9D:C0:B4:D0",
  "pem": "-----BEGIN CERTIFICATE-----\nMIIEezCCA2OgAwIBAgIUMFJTjEXe9sDDDpPXcAiUBt5+QyIwDQYJKoZIhvcNAQEL\nBQAwZTELMAkGA1UEBhMCQ04xEDAOBgNVBAgTB0JlaUppbmcxEDAOBgNVBAcTB0Jl\naUppbmcxDDAKBgNVBAoTA2s4czEPMA0GA1UECxMGU3lzdGVtMRMwEQYDVQQDEwpr\ndWJlcm5ldGVzMB4XDTIxMDYwOTA1MjAwMFoXDTMxMDYwNzA1MjAwMFowZTELMAkG\nA1UEBhMCQ04xEDAOBgNVBAgTB0JlaUppbmcxEDAOBgNVBAcTB0JlaUppbmcxDDAK\nBgNVBAoTA2s4czEPMA0GA1UECxMGU3lzdGVtMRMwEQYDVQQDEwprdWJlcm5ldGVz\nMIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAw0BpjZQNEd6Oqu8ubEWG\nhbdwJecOTCfdbY+VLIKEm0Tys8ZBlu7OrtZ8Rj5OAZTXil0ZJz+hvHo8YTNJJ16g\njHV88VSpfoXD5DE59PITSFwfY1lWHVctC3Ddo9CM9cU9Ty+Kf29XcrLbc/VNGZTB\ncvKXoM3b6NkBKOdKphVjUvafhKC6ls2ac5uub3uqZTpPgBs/1PvINKNZkP5U6lUV\noTBMAT+qbQ9aggA+bA+WegL3jHU78ngo1XMnsb1HfAjwKDOf66smNJ/K+YjD+Cul\ngjpyqOQKGlz5xqXUcBgIMO9djI4f5hvaMsSje1aSJ/oh5AfQbxQsGjajlS80ED08\nxwIDAQABo4IBITCCAR0wDgYDVR0PAQH/BAQDAgWgMB0GA1UdJQQWMBQGCCsGAQUF\nBwMBBggrBgEFBQcDAjAMBgNVHRMBAf8EAjAAMB0GA1UdDgQWBBTjhA+cAAdKj1yy\nNUWgUE0+ncC00DCBvgYDVR0RBIG2MIGzgglsb2NhbGhvc3SCCmt1YmVybmV0ZXOC\nEmt1YmVybmV0ZXMuZGVmYXVsdIIWa3ViZXJuZXRlcy5kZWZhdWx0LnN2Y4Iea3Vi\nZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVygiRrdWJlcm5ldGVzLmRlZmF1bHQu\nc3ZjLmNsdXN0ZXIubG9jYWyHBH8AAAGHBMCoUAGHBMCoUAKHBMCoUC2HBMCoUC6H\nBMCoUC+HBAr+AAEwDQYJKoZIhvcNAQELBQADggEBAG+RUKp4cxz4EOqmAPiczkl2\nHciAg01RbCavoLoUWmoDDAQf7PIhQF2pLewFCwR5w6SwvCJAVdg+eHdefJ2MBtJr\nKQgbmEOBXd4Z5ZqBeSP6ViHvb1pKtRSldznZLfxjsVd0bN3na/JmS4TZ90SqLLtL\nN4CgGfTs2AfrtbtWIqewDMS9aWjBK8VePzLBmsdLddD4WYQOnl+QjdrX9bbqYRCG\nQo3CKvJ3JZqh6AJHcgKsm0702uMU/TCJwe1M8I8SpYrwA74uCBy3O9jXed1rZlrp\nRVURB6Ro7SMLjiadTJyf6AbLPMmZcPKHhZ1XG07q8Od2Kd+KVx1PxF3et6OOteE=\n-----END CERTIFICATE-----\n"
}
```
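The "expiry": "87600h" in ca-config.json is exactly 3650 days, not ten calendar years. The interval from 2021-06-09 contains two leap days, 2024-02-29 and 2028-02-29, so not_after lands on 2031-06-07 rather than 2031-06-09. Below is a minimal sketch that reproduces this arithmetic. It assumes GNU date, which accepts `-d @EPOCH`. `cert_not_after` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (GNU date assumed): add a cfssl expiry given in hours to
# a not_before timestamp interpreted as UTC, and print the resulting not_after.
cert_not_after() {
  local not_before="$1" expiry_hours="$2" start
  start=$(date -u -d "$not_before" +%s)
  date -u -d "@$(( start + expiry_hours * 3600 ))" +%Y-%m-%dT%H:%M:%SZ
}

# Usage sketch with the values from the output above:
#   cert_not_after '2021-06-09 05:20:00' 87600   # 2031-06-07T05:20:00Z
```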

3.12 Distribute the Certificates

```shell
# run in /root/ssl, where the certificates were generated
mkdir -p /etc/kubernetes/pki
cp *.pem /etc/kubernetes/pki
```
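Before starting any service, it helps to confirm that every certificate and key pair from 3.3 to 3.10 actually landed in the target directory. Here is a small hypothetical check. The file names follow the cfssljson -bare prefixes used above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper: list any PEM files from sections 3.3-3.10
# missing in a directory (default /etc/kubernetes/pki). Returns 1 if any are missing.
check_pki_files() {
  local dir="${1:-/etc/kubernetes/pki}" missing=0 name f
  for name in ca etcd kube-apiserver kube-controller-manager kube-scheduler admin kube-proxy; do
    for f in "$name.pem" "$name-key.pem"; do
      if [ ! -f "$dir/$f" ]; then
        echo "missing: $f"
        missing=1
      fi
    done
  done
  return "$missing"
}

# Usage sketch:
#   check_pki_files /etc/kubernetes/pki && echo "all certificates present"
```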

4. Install etcd (Multi-Node)

4.1 Node etcd-1

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
wget https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz
tar zxvf etcd-v3.5.0-linux-amd64.tar.gz
mv etcd-v3.5.0-linux-amd64/{etcd,etcdctl} /usr/bin/

mkdir -p /etc/etcd
cat > /etc/etcd/etcd.conf.yml << EOF
# This is the configuration file for the etcd server.

# Human-readable name for this member.
name: 'etcd-1'

# Path to the data directory.
data-dir: /var/lib/etcd/default.etcd

# Path to the dedicated wal directory.
wal-dir:

# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000

# Time (in milliseconds) of a heartbeat interval.
heartbeat-interval: 100

# Time (in milliseconds) for an election to timeout.
election-timeout: 1000

# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0

# List of comma separated URLs to listen on for peer traffic.
listen-peer-urls: 'https://localhost:2380,https://192.168.80.45:2380'

# List of comma separated URLs to listen on for client traffic.
listen-client-urls: 'https://localhost:2379,https://192.168.80.45:2379'

# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5

# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5

# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:

# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
# Must match this member's entry in initial-cluster, so no localhost here.
initial-advertise-peer-urls: 'https://192.168.80.45:2380'

# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
advertise-client-urls: 'https://192.168.80.45:2379'

# Discovery URL used to bootstrap the cluster.
discovery:

# Valid values include 'exit', 'proxy'
discovery-fallback: 'proxy'

# HTTP proxy to use for traffic to discovery service.
discovery-proxy:

# DNS domain used to bootstrap initial cluster.
discovery-srv:

# Initial cluster configuration for bootstrapping.
initial-cluster: 'etcd-1=https://192.168.80.45:2380,etcd-2=https://192.168.80.46:2380,etcd-3=https://192.168.80.47:2380'

# Initial cluster token for the etcd cluster during bootstrap.
initial-cluster-token: 'etcd-cluster'

# Initial cluster state ('new' or 'existing').
initial-cluster-state: 'new'

# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false

# Accept etcd V2 client requests
enable-v2: true

# Enable runtime profiling data via HTTP server
enable-pprof: true

# Valid values include 'on', 'readonly', 'off'
proxy: 'off'

# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000

# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000

# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000

# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000

# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0

client-transport-security:
  # Path to the client server TLS cert file.
  cert-file: /etc/kubernetes/pki/etcd.pem

  # Path to the client server TLS key file.
  key-file: /etc/kubernetes/pki/etcd-key.pem

  # Enable client cert authentication.
  client-cert-auth: true

  # Path to the client server TLS trusted CA cert file.
  trusted-ca-file: /etc/kubernetes/pki/ca.pem

  # Client TLS using generated certificates
  auto-tls: true

peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file: /etc/kubernetes/pki/etcd.pem

  # Path to the peer server TLS key file.
  key-file: /etc/kubernetes/pki/etcd-key.pem

  # Enable peer client cert authentication.
  client-cert-auth: true

  # Path to the peer server TLS trusted CA cert file.
  trusted-ca-file: /etc/kubernetes/pki/ca.pem

  # Peer TLS using generated certificates.
  auto-tls: true

# Enable debug-level logging for etcd.
log-level: debug

logger: zap

# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
log-outputs: [stderr]

# Force to create a new one member cluster.
force-new-cluster: false

auto-compaction-mode: periodic
auto-compaction-retention: "1"
EOF

cat > /lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/etcd --config-file=/etc/etcd/etcd.conf.yml
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

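4.2 Other Nodes

```shell
sudo -i
# unpack the etcd archive prepared on etcd-1 (how it was built is not shown here)
cd / && mv /home/ubuntu/etcd-clone.tar / && tar xvf etcd-clone.tar && rm -f etcd-clone.tar
# set name and the listen-/advertise- URL IPs to this node's values;
# leave initial-cluster unchanged
vi /etc/etcd/etcd.conf.yml
```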
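The manual vi edit can also be scripted. The sketch below is hypothetical. It changes only the name line and the four listen-/advertise- URL lines. The initial-cluster line must keep all three members' addresses, so it has to stay as-is. The sketch assumes the etcd-1 file from 4.1 as input, with 192.168.80.45 as the address to replace.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive another member's etcd.conf.yml from etcd-1's.
# Prints the result to stdout; only 'name' and the listen-/advertise- URL
# lines are rewritten, initial-cluster is left untouched.
render_etcd_conf() {
  local src="$1" name="$2" ip="$3"
  sed -E \
    -e "s/^name: .*/name: '${name}'/" \
    -e "/^(listen-peer-urls|listen-client-urls|initial-advertise-peer-urls|advertise-client-urls):/ s/192\.168\.80\.45/${ip}/g" \
    "$src"
}

# Usage sketch (on etcd-2):
#   render_etcd_conf /etc/etcd/etcd.conf.yml etcd-2 192.168.80.46 > /tmp/etcd.conf.yml
#   mv /tmp/etcd.conf.yml /etc/etcd/etcd.conf.yml
```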

4.3 Start

```shell
# Start etcd on all three nodes at about the same time: with Type=notify,
# the first member does not report ready until enough peers have joined.
systemctl daemon-reload
systemctl start etcd
systemctl status etcd
systemctl enable etcd

etcdctl member list --cacert=/etc/kubernetes/pki/ca.pem --cert=/etc/kubernetes/pki/etcd.pem --key=/etc/kubernetes/pki/etcd-key.pem --write-out=table
+------------------+---------+--------+----------------------------+----------------------------+------------+
|        ID        | STATUS  |  NAME  |         PEER ADDRS         |        CLIENT ADDRS        | IS LEARNER |
+------------------+---------+--------+----------------------------+----------------------------+------------+
| 46bc5ad35e418584 | started | etcd-1 | https://192.168.80.45:2380 | https://192.168.80.45:2379 |      false |
| 8f347c1327049bc8 | started | etcd-3 | https://192.168.80.47:2380 | https://192.168.80.47:2379 |      false |
| b01e7a29099f3eb8 | started | etcd-2 | https://192.168.80.46:2380 | https://192.168.80.46:2379 |      false |
+------------------+---------+--------+----------------------------+----------------------------+------------+

etcdctl endpoint health --cacert=/etc/kubernetes/pki/ca.pem --cert=/etc/kubernetes/pki/etcd.pem --key=/etc/kubernetes/pki/etcd-key.pem --cluster --write-out=table
+----------------------------+--------+-------------+-------+
|          ENDPOINT          | HEALTH |    TOOK     | ERROR |
+----------------------------+--------+-------------+-------+
| https://192.168.80.47:2379 |   true | 20.973639ms |       |
| https://192.168.80.46:2379 |   true | 29.842299ms |       |
| https://192.168.80.45:2379 |   true | 30.564766ms |       |
+----------------------------+--------+-------------+-------+
```
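To use the health check in a script, you can count the healthy rows in the table output and compare the count with the Raft quorum. For an n-member cluster the quorum is floor(n/2) + 1, so a 3-member cluster tolerates one failed member. The helpers below are hypothetical. They assume the table layout shown above, in which HEALTH is the second column.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for scripting the etcd health check.

# Count healthy endpoints in `etcdctl endpoint health --write-out=table` output
# read from stdin. With '|' as separator, the leading '|' makes $1 empty, so
# the HEALTH column is awk field $3.
count_healthy() {
  awk -F'|' '$3 ~ /^ *true *$/ { n++ } END { print n + 0 }'
}

# Raft quorum for an n-member cluster: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Usage sketch:
#   healthy=$(etcdctl endpoint health --cluster --write-out=table \
#     --cacert=/etc/kubernetes/pki/ca.pem --cert=/etc/kubernetes/pki/etcd.pem \
#     --key=/etc/kubernetes/pki/etcd-key.pem | count_healthy)
#   [ "$healthy" -ge "$(quorum 3)" ] && echo "etcd has quorum"
```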

4. Install etcd (Single Node, alternative to the multi-node setup)

4.1 Node etcd-1

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
wget https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz
tar zxvf etcd-v3.5.0-linux-amd64.tar.gz
mv etcd-v3.5.0-linux-amd64/{etcd,etcdctl} /usr/bin/

mkdir -p /etc/etcd
cat > /etc/etcd/etcd.conf.yml << EOF
name: 'etcd-1'
data-dir: /var/lib/etcd/default.etcd
wal-dir:
snapshot-count: 10000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://localhost:2380,https://192.168.80.45:2380'
listen-client-urls: 'https://localhost:2379,https://192.168.80.45:2379'
max-snapshots: 5
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://localhost:2380,https://192.168.80.45:2380'
advertise-client-urls: 'https://localhost:2379,https://192.168.80.45:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: /etc/kubernetes/pki/etcd.pem
  key-file: /etc/kubernetes/pki/etcd-key.pem
  client-cert-auth: true
  trusted-ca-file: /etc/kubernetes/pki/ca.pem
  auto-tls: true
peer-transport-security:
  cert-file: /etc/kubernetes/pki/etcd.pem
  key-file: /etc/kubernetes/pki/etcd-key.pem
  client-cert-auth: true
  trusted-ca-file: /etc/kubernetes/pki/ca.pem
  auto-tls: true
log-level: debug
logger: zap
log-outputs: [stderr]
force-new-cluster: false
auto-compaction-mode: periodic
auto-compaction-retention: "1"
EOF

cat > /lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/etcd --config-file=/etc/etcd/etcd.conf.yml
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start etcd
systemctl status etcd
systemctl enable etcd

etcdctl member list --cacert=/etc/kubernetes/pki/ca.pem --cert=/etc/kubernetes/pki/etcd.pem --key=/etc/kubernetes/pki/etcd-key.pem --write-out=table
etcdctl endpoint health --cacert=/etc/kubernetes/pki/ca.pem --cert=/etc/kubernetes/pki/etcd.pem --key=/etc/kubernetes/pki/etcd-key.pem --cluster --write-out=table
```

5. Master Node

Kubernetes master node components:

- kube-apiserver
- kube-scheduler
- kube-controller-manager
- kubelet (optional, but needed in practice)
- kube-proxy (optional, but needed in practice)

5.1 Installation Preparation

Release notes: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
wget https://dl.k8s.io/v1.21.4/kubernetes-server-linux-amd64.tar.gz
tar zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager kubectl kubelet kube-proxy /usr/bin
```
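The CHANGELOG-1.21.md page linked above lists a SHA512 hash for each release tarball. You can check the download against that hash before unpacking it. Below is a minimal sketch built on GNU coreutils' `sha512sum -c`. `verify_sha512` is a hypothetical name, and you paste the expected hash from the changelog yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if FILE's SHA512 digest equals EXPECTED.
# Uses the "<hash>  <file>" line format that sha512sum -c reads from stdin.
verify_sha512() {
  local file="$1" expected="$2"
  echo "${expected}  ${file}" | sha512sum -c --status -
}

# Usage sketch:
#   verify_sha512 kubernetes-server-linux-amd64.tar.gz "<sha512 from CHANGELOG-1.21.md>" \
#     && tar zxvf kubernetes-server-linux-amd64.tar.gz
```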

5.2 apiserver

5.2.1 TLS Bootstrapping Token

Enable the TLS bootstrapping mechanism:

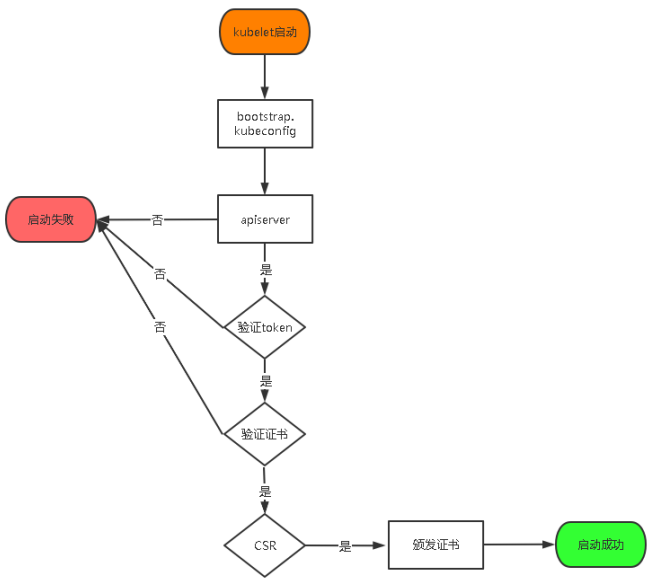

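TLS bootstrapping: once TLS authentication is enabled on the master's kube-apiserver, the kubelet and kube-proxy on every node need a valid CA-signed certificate to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster harder.

To simplify this, Kubernetes provides TLS bootstrapping, which issues client certificates automatically:

1. The kubelet authenticates as a low-privilege user.
2. It submits a certificate signing request through kube-apiserver.
3. Once the request is approved, kube-controller-manager signs it with the cluster CA.

This approach is strongly recommended for nodes. Here it is used only for kubelet; kube-proxy still uses one certificate that we issue ourselves.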

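For the TLS bootstrapping workflow, first create the static token file that the kubelet-bootstrap user authenticates with: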

```shell
# 16 random bytes printed as 32 hex characters
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Format: token,user,uid,"group"
cat > /etc/kubernetes/token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
```
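The token pipeline above reads 16 random bytes. od prints them as four zero-padded 8-digit hex words, and tr strips the spaces, so the result is always 32 lowercase hex characters. Below is a small sketch that wraps the same pipeline, checks its shape, and reads the token back from token.csv the way 5.5.2 does. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Same pipeline as above, wrapped in a function.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' '
}

# Hypothetical sanity check: exactly 32 lowercase hex characters.
is_valid_token() {
  [[ "$1" =~ ^[0-9a-f]{32}$ ]]
}

# Hypothetical helper: the token is field 1 of the first token.csv line.
token_from_csv() {
  awk -F, 'NR == 1 { print $1 }' "$1"
}

# Usage sketch:
#   is_valid_token "$(token_from_csv /etc/kubernetes/token.csv)" && echo "token OK"
```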

5.2.2 Start on Boot

--service-cluster-ip-range=10.96.0.0/16: Service IP range

```shell
# --log-dir and --audit-log-path point here; create the directory first,
# otherwise the component exits when it fails to create its log file
mkdir -p /var/log/kubernetes

# With the 3-member etcd cluster, --etcd-servers can list all members, comma-separated
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=192.168.80.45 \
--secure-port=6443 \
--advertise-address=192.168.80.45 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.96.0.0/16 \
--token-auth-file=/etc/kubernetes/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/etc/kubernetes/pki/kube-apiserver.pem \
--tls-private-key-file=/etc/kubernetes/pki/kube-apiserver-key.pem \
--client-ca-file=/etc/kubernetes/pki/ca.pem \
--kubelet-client-certificate=/etc/kubernetes/pki/kube-apiserver.pem \
--kubelet-client-key=/etc/kubernetes/pki/kube-apiserver-key.pem \
--service-account-key-file=/etc/kubernetes/pki/ca-key.pem \
--service-account-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
--service-account-issuer=https://kubernetes.default.svc.cluster.local \
--etcd-cafile=/etc/kubernetes/pki/ca.pem \
--etcd-certfile=/etc/kubernetes/pki/etcd.pem \
--etcd-keyfile=/etc/kubernetes/pki/etcd-key.pem \
--etcd-servers=https://192.168.80.45:2379 \
--allow-privileged=true \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/var/log/kubernetes/kube-apiserver-audit.log \
--event-ttl=1h \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2"

cat > /lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kube-apiserver
systemctl status kube-apiserver
systemctl enable kube-apiserver
```

5.2.3 Manage the Cluster with kubectl

```shell
mkdir -p /root/.kube
KUBE_CONFIG=/root/.kube/config
KUBE_APISERVER="https://192.168.80.45:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials cluster-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

5.2.4 Authorize the kubelet-bootstrap User to Request Certificates

This binding prevents the following error: failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "kubelet-bootstrap" cannot create resource "certificatesigningrequests" in API group "certificates.k8s.io" at the cluster scope

```shell
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

5.2.5 Authorize kube-apiserver to Access kubelet

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install

# User "kubernetes" is the CN of kube-apiserver.pem, which kube-apiserver
# presents to kubelet via --kubelet-client-certificate
cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml
```

5.3 controller-manager

5.3.1 kubeconfig

```shell
KUBE_CONFIG="/etc/kubernetes/kube-controller-manager.kubeconfig"
KUBE_APISERVER="https://192.168.80.45:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/kube-controller-manager.pem \
  --client-key=/etc/kubernetes/pki/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```
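The same four kubectl config calls appear for every certificate-based client, in 5.2.3, 5.3.1, 5.4.1 and 5.6.2. A hypothetical wrapper like the one below keeps them consistent. It uses only the kubectl config subcommands and flags already used in this guide. The KUBECTL variable is an assumption added so the commands can be printed instead of run, for example with KUBECTL=echo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a certificate-based kubeconfig for one client.
# Args: kubeconfig path, user name, client cert, client key, API server URL.
# CA_FILE and KUBECTL may be overridden via the environment.
make_cert_kubeconfig() {
  local kubeconfig="$1" user="$2" cert="$3" key="$4" server="$5"
  local ca="${CA_FILE:-/etc/kubernetes/pki/ca.pem}"
  local kubectl="${KUBECTL:-kubectl}"

  "$kubectl" config set-cluster kubernetes \
    --certificate-authority="$ca" \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$kubeconfig"
  "$kubectl" config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$kubeconfig"
  "$kubectl" config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$kubeconfig"
  "$kubectl" config use-context default --kubeconfig="$kubeconfig"
}

# Usage sketch, equivalent to 5.4.1:
#   make_cert_kubeconfig /etc/kubernetes/kube-scheduler.kubeconfig kube-scheduler \
#     /etc/kubernetes/pki/kube-scheduler.pem /etc/kubernetes/pki/kube-scheduler-key.pem \
#     https://192.168.80.45:6443
```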

5.3.2 Start on Boot

--cluster-cidr=10.244.0.0/16: Pod IP range

--service-cluster-ip-range=10.96.0.0/16: Service IP range

```shell
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/var/log/kubernetes \
--leader-elect=true \
--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
--bind-address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.96.0.0/16 \
--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
--root-ca-file=/etc/kubernetes/pki/ca.pem \
--service-account-private-key-file=/etc/kubernetes/pki/ca-key.pem \
--cluster-signing-duration=87600h0m0s"

cat > /lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kube-controller-manager
systemctl status kube-controller-manager
systemctl enable kube-controller-manager
```
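The Pod range (--cluster-cidr) and the Service range (--service-cluster-ip-range) must not overlap. The kubelet's clusterDNS address, 10.96.0.2 in 5.5.1, must fall inside the Service range. Below is a pure-bash pre-flight sketch for both checks. The function names are hypothetical, and the sketch assumes IPv4 CIDRs with no leading zeros in any octet.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks for the CIDRs used by the control plane.

# Dotted-quad IPv4 -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR block as integers
cidr_bounds() {
  local net="${1%/*}" len="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local start=$(( $(ip_to_int "$net") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Exit 0 if two CIDR blocks share at least one address
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_bounds "$1")"
  read -r s2 e2 <<< "$(cidr_bounds "$2")"
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

# Exit 0 if an IP lies inside a CIDR block
ip_in_cidr() {
  local ip s e
  ip=$(ip_to_int "$1")
  read -r s e <<< "$(cidr_bounds "$2")"
  [ "$ip" -ge "$s" ] && [ "$ip" -le "$e" ]
}

# Usage sketch:
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/16 && echo "Pod and Service CIDRs overlap!"
#   ip_in_cidr 10.96.0.2 10.96.0.0/16 || echo "clusterDNS is outside the Service CIDR!"
```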

5.4 scheduler

5.4.1 kubeconfig

```shell
KUBE_CONFIG="/etc/kubernetes/kube-scheduler.kubeconfig"
KUBE_APISERVER="https://192.168.80.45:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/kube-scheduler.pem \
  --client-key=/etc/kubernetes/pki/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

5.4.2 Start on Boot

```shell
KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/var/log/kubernetes \
--leader-elect \
--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
--bind-address=127.0.0.1"

cat > /lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kube-scheduler
systemctl status kube-scheduler
systemctl enable kube-scheduler
```

5.5 kubelet

5.5.1 Configuration

```shell
# cgroupDriver must match Docker's cgroup driver (cgroupfs is Docker's default);
# clusterDNS must be an address inside the Service range 10.96.0.0/16
cat > /etc/kubernetes/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
  - 10.96.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
```

5.5.2 kubeconfig

```shell
# the token is the first comma-separated field of token.csv
BOOTSTRAP_TOKEN=$(awk -F, '{print $1}' /etc/kubernetes/token.csv)
KUBE_CONFIG="/etc/kubernetes/bootstrap.kubeconfig"
KUBE_APISERVER="https://192.168.80.45:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials "kubelet-bootstrap" \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

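5.5.3 Start on Boot

Note: the file given by --kubeconfig=/etc/kubernetes/kubelet.kubeconfig does not exist yet. kubelet writes it automatically after its certificate request is approved and it joins the cluster.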

```shell
KUBELET_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/var/log/kubernetes \
--hostname-override=k8s-master1 \
--network-plugin=cni \
--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
--config=/etc/kubernetes/kubelet-config.yml \
--cert-dir=/etc/kubernetes/pki \
--pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.1"

cat > /lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
ExecStart=/usr/bin/kubelet $KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kubelet
systemctl status kubelet
systemctl enable kubelet
```

5.5.4 Join the Cluster

```shell
kubectl get csr
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-ghWG-AWFM9sxJbr5A-BIq9puVIRxfFHrQlwDjYbHba8   25s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

kubectl certificate approve node-csr-ghWG-AWFM9sxJbr5A-BIq9puVIRxfFHrQlwDjYbHba8

kubectl get csr
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-ghWG-AWFM9sxJbr5A-BIq9puVIRxfFHrQlwDjYbHba8   53m   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued

# NotReady is expected until a CNI network plugin is deployed (--network-plugin=cni)
kubectl get node
NAME          STATUS     ROLES    AGE    VERSION
k8s-master1   NotReady   <none>   4m8s   v1.21.4
```
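When several nodes bootstrap at once, approving each CSR by hand becomes tedious. Below is a sketch that extracts the names of Pending requests from `kubectl get csr` output so they can be passed to `kubectl certificate approve`. It assumes CONDITION is the last column, as in the 1.21 output above. `pending_csrs` is a hypothetical name. Approve only requests you expect, because each approval grants a node identity.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# NAME of every request whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage sketch (GNU xargs -r skips the call when nothing is pending):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```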

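5.6 kube-proxy

5.6.1 Parameters

`clusterCIDR` is the Pod IP range, not the Service IP range. It should match `--cluster-cidr` of kube-controller-manager, which is 10.244.0.0/16 in this guide (the same range the network plugin uses in section 6). The Service range 10.96.0.0/16 is configured separately, through `--service-cluster-ip-range` on kube-apiserver and kube-controller-manager.

```shell
cat > /etc/kubernetes/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
hostnameOverride: k8s-master1
clusterCIDR: 10.244.0.0/16
EOF
```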

5.6.2 kubeconfig file

```shell
KUBE_CONFIG="/etc/kubernetes/kube-proxy.kubeconfig"
KUBE_APISERVER="https://192.168.80.45:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/pki/kube-proxy.pem \
  --client-key=/etc/kubernetes/pki/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
```

5.6.3 Start on boot

```shell
# Note: kube-proxy ignores --proxy-mode when --config is given. To change the mode,
# set "mode:" in kube-proxy-config.yml instead (iptables is the default on Linux).
KUBE_PROXY_OPTS="--logtostderr=false \
--v=2 \
--proxy-mode=iptables \
--log-dir=/var/log/kubernetes \
--config=/etc/kubernetes/kube-proxy-config.yml"

cat > /lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl start kube-proxy
systemctl status kube-proxy
systemctl enable kube-proxy
```

5.7 Cluster management

5.7.1 Cluster configuration

```shell
kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.80.45:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: cluster-admin
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: cluster-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
```

5.7.2 Cluster status

```shell
kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
```
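To check this output from a script rather than by eye, the sketch below uses a hypothetical helper, `unhealthy_components`. It reads `kubectl get cs` output on stdin and prints every component whose STATUS is not `Healthy`. The column layout it relies on is the one shown above.

```shell
#!/usr/bin/env bash
# Sketch: print components whose STATUS column is not "Healthy".
# Input: `kubectl get cs` output on stdin. The header line and the
# deprecation "Warning:" line (if it was captured) are skipped.
unhealthy_components() {
  awk '$1 == "NAME" || $1 == "Warning:" { next }
       NF && $2 != "Healthy" { print $1 }'
}
```

Usage: `kubectl get cs 2>/dev/null | unhealthy_components`. Empty output means every listed component reported Healthy.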

5.8 Command completion

```shell
apt install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc
```

6. Network plugins

The Pod IP range configured in the network plugin must match `--cluster-cidr` of kube-controller-manager.
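A quick way to confirm which range an address belongs to is sketched below with a hypothetical `ip_in_cidr` helper. For example, Pod IPs such as the kube-dns endpoint 10.244.85.194 should fall in the Pod CIDR 10.244.0.0/16, while Service IPs such as 10.96.0.2 fall in 10.96.0.0/16. It handles IPv4 only and assumes dotted-decimal octets without leading zeros.

```shell
#!/usr/bin/env bash
# Sketch: test whether an IPv4 address lies inside a CIDR block (IPv4 only).

# Convert a dotted-quad address to a 32-bit integer.
# Octets must not have leading zeros (they would be read as octal).
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if $1 (address) is inside $2 (CIDR such as 10.244.0.0/16), else 1.
ip_in_cidr() {
  local net=${2%/*} bits=${2#*/}
  # Netmask with the top $bits bits set, kept within 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

Usage: `ip_in_cidr 10.244.85.194 10.244.0.0/16 && echo "in Pod range"`.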

6.1 CNI plugins

Run this on all nodes.

```shell
mkdir -p $HOME/k8s-install/network && cd $_
wget https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-amd64-v0.9.1.tgz
mkdir -p /opt/cni/bin
tar zxvf cni-plugins-linux-amd64-v0.9.1.tgz -C /opt/cni/bin
```
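To confirm that the extraction worked on every node, the hypothetical `missing_cni_plugins` helper below lists plugin binaries that are absent from the directory. The default set (bridge, host-local, loopback, portmap) is an assumption. All four ship in the v0.9.1 tarball, but you should pass the names your network add-on actually needs.

```shell
#!/usr/bin/env bash
# Sketch: print CNI plugins missing (or not executable) in a directory.
# $1: plugin directory (default /opt/cni/bin); remaining args: plugin names.
missing_cni_plugins() {
  local dir=${1:-/opt/cni/bin} p
  [ $# -gt 0 ] && shift
  # Fall back to an assumed minimal plugin set when no names are given
  [ $# -eq 0 ] && set -- bridge host-local loopback portmap
  for p in "$@"; do
    [ -x "$dir/$p" ] || echo "$p"
  done
}
```

Usage: `missing_cni_plugins /opt/cni/bin`. Empty output means every listed plugin is present.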

6.2 Calico

Calico is a pure Layer 3 data-center networking solution and is currently one of the mainstream network plugins for Kubernetes.

```shell
mkdir -p $HOME/k8s-install/network && cd $HOME/k8s-install/network
wget https://docs.projectcalico.org/manifests/calico.yaml

# Uncomment CALICO_IPV4POOL_CIDR and set it to the Pod CIDR (--cluster-cidr)
vi calico.yaml
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"

kubectl apply -f calico.yaml

kubectl get pod -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-7f4f5bf95d-tgklk   1/1     Running   0          2m7s
calico-node-fwv5x                          1/1     Running   0          2m8s
calico-node-ttt2c                          1/1     Running   0          2m8s
calico-node-xjvjf                          1/1     Running   0          2m8s

kubectl get node
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    master   65m   v1.21.4
k8s-node01    Ready    node     20m   v1.21.4
k8s-node02    Ready    node     20m   v1.21.4
```
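Before applying the manifest, it helps to confirm that the `vi` edit took effect. The hypothetical `calico_pool_cidr` helper below prints the value of the first uncommented `- name: CALICO_IPV4POOL_CIDR` entry. It assumes the `value:` line follows on a later line, as in the stock manifest. Commented-out entries are ignored.

```shell
#!/usr/bin/env bash
# Sketch: print the CALICO_IPV4POOL_CIDR value from a calico manifest.
# Only an uncommented "- name: CALICO_IPV4POOL_CIDR" entry is considered.
calico_pool_cidr() {
  awk '
    /^[ \t]*- name: CALICO_IPV4POOL_CIDR[ \t]*$/ { found = 1; next }
    found && /^[ \t]*value:/ {
      v = $0
      sub(/^[ \t]*value:[ \t]*/, "", v)   # drop the "value:" key
      gsub(/"/, "", v)                    # drop surrounding quotes
      print v
      exit
    }
  ' "${1:-calico.yaml}"
}
```

Usage: `calico_pool_cidr calico.yaml` should print `10.244.0.0/16`. Empty output means the entry is still commented out.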

6.3 flannel

flannel is an alternative to Calico; install only one of the two network plugins.

```shell
mkdir -p $HOME/k8s-install/network && cd $HOME/k8s-install/network
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Set "Network" in net-conf.json to the Pod CIDR before applying the manifest
vi kube-flannel.yml
      "Network": "10.244.0.0/16",

kubectl apply -f kube-flannel.yml

kubectl get pod -n kube-system
NAME                    READY   STATUS    RESTARTS   AGE
kube-flannel-ds-8qnnx   1/1     Running   0          10s
kube-flannel-ds-979lc   1/1     Running   0          16m
kube-flannel-ds-kgmgg   1/1     Running   0          16m

kubectl get node
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    master   85m   v1.21.4
k8s-node01    Ready    node     40m   v1.21.4
k8s-node02    Ready    node     40m   v1.21.4
```
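The `vi` step can also be done non-interactively. The sketch below uses a hypothetical `set_flannel_network` helper that rewrites only the `"Network"` value in `net-conf.json` with `sed -i` (GNU sed assumed, as on Ubuntu). It has to run before `kubectl apply`.

```shell
#!/usr/bin/env bash
# Sketch: set the "Network" field of flannel's net-conf.json in place.
# $1: Pod CIDR; $2: manifest path (default kube-flannel.yml). Requires GNU sed -i.
set_flannel_network() {
  local cidr=$1 file=${2:-kube-flannel.yml}
  sed -i "s#\"Network\": *\"[^\"]*\"#\"Network\": \"${cidr}\"#" "$file"
}
```

Usage: `set_flannel_network 10.244.0.0/16 kube-flannel.yml && kubectl apply -f kube-flannel.yml`.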

6.4 ovs-cni

6.4.1 Install Open vSwitch

```shell
apt install -y openvswitch-switch
```

6.4.2 Install Multus

Multus provides the `network-attachment-definitions` CRD, which is used to define the OVS network configuration.

```shell
wget https://raw.githubusercontent.com/k8snetworkplumbingwg/multus-cni/master/images/multus-daemonset.yml
kubectl apply -f multus-daemonset.yml
```

6.4.3 Install ovs-cni

```shell
# Reference template from the ovs-cni repository (a /blob/ URL returns an HTML page, so use the raw URL)
wget https://raw.githubusercontent.com/k8snetworkplumbingwg/ovs-cni/main/manifests/ovs-cni.yml.in

# Quote the heredoc delimiter so that $(NODE_NAME) is written literally
# instead of being run as a shell command substitution
cat > ovs-cni.yml << "EOF"
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ovs-cni-amd64
  namespace: kube-system
  labels:
    tier: node
    app: ovs-cni
spec:
  selector:
    matchLabels:
      app: ovs-cni
  template:
    metadata:
      labels:
        tier: node
        app: ovs-cni
      annotations:
        description: OVS CNI allows users to attach their Pods/VMs to Open vSwitch bridges available on nodes
    spec:
      serviceAccountName: ovs-cni-marker
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      initContainers:
      - name: ovs-cni-plugin
        image: quay.io/kubevirt/ovs-cni-plugin:latest
        command: ['cp', '/ovs', '/host/opt/cni/bin/ovs']
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
        volumeMounts:
        - name: cnibin
          mountPath: /host/opt/cni/bin
      containers:
      - name: ovs-cni-marker
        image: quay.io/kubevirt/ovs-cni-marker:latest
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
        args:
          - -node-name
          - $(NODE_NAME)
          - -ovs-socket
          - /host/var/run/openvswitch/db.sock
        volumeMounts:
        - name: ovs-var-run
          mountPath: /host/var/run/openvswitch
        env:
          - name: NODE_NAME
            valueFrom:
              fieldRef:
                fieldPath: spec.nodeName
      volumes:
      - name: cnibin
        hostPath:
          path: /opt/cni/bin
      - name: ovs-var-run
        hostPath:
          path: /var/run/openvswitch
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ovs-cni-marker-cr
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/status
  verbs:
  - get
  - update
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ovs-cni-marker-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ovs-cni-marker-cr
subjects:
- kind: ServiceAccount
  name: ovs-cni-marker
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ovs-cni-marker
  namespace: kube-system
EOF

kubectl apply -f ovs-cni.yml
```

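6.4.4 Bridge

```shell
# Create the bridge on each node whose Pods should attach to br1
ovs-vsctl add-br br1
ovs-vsctl show

# The ovs-cni marker then reports br1 as an allocatable node resource
kubectl describe node k8s-master1
...
Capacity:
  ovs-cni.network.kubevirt.io/br1:  1k
...
Allocatable:
  ovs-cni.network.kubevirt.io/br1:  1k
```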

6.4.5 Network attachment definition

```shell
cat > ovs-ipam-net.yml << EOF
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: ovs-ipam-net
  annotations:
    k8s.v1.cni.cncf.io/resourceName: ovs-cni.network.kubevirt.io/br1
spec:
  config: '{
      "cniVersion": "0.3.1",
      "type": "ovs",
      "bridge": "br1",
      "vlan": 100,
      "ipam": {
        "type": "static"
      }
    }'
EOF

kubectl apply -f ovs-ipam-net.yml
```

6.4.6 Verification

```shell
cat > ovs-test.yml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: samplepod-1
  annotations:
    k8s.v1.cni.cncf.io/networks: '[
      {
        "name": "ovs-ipam-net",
        "ips": ["10.10.10.1/24"]
      }
    ]'
spec:
  containers:
  - name: pod-1
    command: ["sleep", "99999"]
    image: alpine
---
apiVersion: v1
kind: Pod
metadata:
  name: samplepod-2
  annotations:
    k8s.v1.cni.cncf.io/networks: '[
      {
        "name": "ovs-ipam-net",
        "ips": ["10.10.10.2/24"]
      }
    ]'
spec:
  containers:
  - name: pod-2
    command: ["sleep", "99999"]
    image: alpine
EOF

kubectl apply -f ovs-test.yml

kubectl exec -it samplepod-1 -- ping 10.10.10.2 -c 5
PING 10.10.10.2 (10.10.10.2): 56 data bytes
64 bytes from 10.10.10.2: seq=0 ttl=127 time=10.266 ms
64 bytes from 10.10.10.2: seq=1 ttl=127 time=7.423 ms
64 bytes from 10.10.10.2: seq=2 ttl=127 time=7.265 ms
64 bytes from 10.10.10.2: seq=3 ttl=127 time=14.498
```

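7. Worker nodes

The Kubernetes worker node components are kubelet and kube-proxy. Docker and the CNI plugins from section 6.1 are also required on every worker node.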

7.1 Prepare the clone (run on the master node)

```shell
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install

# Package the kubelet/kube-proxy binaries, unit files, configuration and certificates
tar cvf worker-node-clone.tar /usr/bin/{kubelet,kube-proxy} /lib/systemd/system/{kubelet,kube-proxy}.service /etc/kubernetes/kubelet* /etc/kubernetes/kube-proxy* /etc/kubernetes/pki /etc/kubernetes/bootstrap.kubeconfig

scp worker-node-clone.tar ubuntu@192.168.80.46:/home/ubuntu
scp worker-node-clone.tar ubuntu@192.168.80.47:/home/ubuntu
```

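7.2 Clone the node (run on each worker node)

```shell
cd / && mv /home/ubuntu/worker-node-clone.tar / && tar xvf worker-node-clone.tar && rm -f worker-node-clone.tar

# Remove the master's kubelet identity; each node obtains its own through TLS bootstrapping
rm -f /etc/kubernetes/kubelet.kubeconfig
rm -f /etc/kubernetes/pki/kubelet*

mkdir -p /var/log/kubernetes
```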

7.3 Update the configuration

Change the node name to the actual name of each node:

```shell
vi /lib/systemd/system/kubelet.service
--hostname-override=k8s-node01

vi /etc/kubernetes/kube-proxy-config.yml
hostnameOverride: k8s-node01
```
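The same two edits can be scripted with `sed`, which is handy when cloning several nodes. This is a sketch under assumptions: `set_node_name` is a hypothetical helper, the file paths default to the ones used in this guide, and GNU `sed -i` is available.

```shell
#!/usr/bin/env bash
# Sketch: set the node name in the kubelet unit file and the kube-proxy config.
# $1: node name; $2/$3: optional file paths (defaults as in this guide).
set_node_name() {
  local name=$1
  local unit=${2:-/lib/systemd/system/kubelet.service}
  local proxy=${3:-/etc/kubernetes/kube-proxy-config.yml}
  # Replace the value of --hostname-override up to the next space
  sed -i "s#--hostname-override=[^ ]*#--hostname-override=${name}#" "$unit"
  # Replace the whole hostnameOverride line in the YAML config
  sed -i "s#^hostnameOverride:.*#hostnameOverride: ${name}#" "$proxy"
}
```

Usage: `set_node_name k8s-node01`, then continue with the `systemctl daemon-reload` step, which picks up the changed unit file.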

7.4 Start on boot

```shell
systemctl daemon-reload
systemctl start kubelet kube-proxy
systemctl status kubelet kube-proxy
systemctl enable kubelet kube-proxy
```

7.5 Join the cluster (run on the master node)

```shell
kubectl get csr
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-ghWG-AWFM9sxJbr5A-BIq9puVIRxfFHrQlwDjYbHba8   94m   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
node-csr-r2GF_8R3zuUe9BCf6eHeijWnzyPDDy-6WQUFOrOAQjA   34s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending
node-csr-wvcKDHm38jQgjyaLiA_G2ycc2Qvmecf_iRRd9IqlSEw   97s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

kubectl certificate approve node-csr-r2GF_8R3zuUe9BCf6eHeijWnzyPDDy-6WQUFOrOAQjA
kubectl certificate approve node-csr-wvcKDHm38jQgjyaLiA_G2ycc2Qvmecf_iRRd9IqlSEw

# NotReady until the network plugin (section 6) is running
kubectl get node
NAME          STATUS     ROLES    AGE   VERSION
k8s-master1   NotReady   <none>   45m   v1.21.4
k8s-node01    NotReady   <none>   6s    v1.21.4
k8s-node02    NotReady   <none>   10s   v1.21.4

# Assign roles (shown in the ROLES column)
kubectl label node k8s-master1 node-role.kubernetes.io/master=
kubectl label node k8s-node01 node-role.kubernetes.io/node=
kubectl label node k8s-node02 node-role.kubernetes.io/node=

kubectl get node
NAME          STATUS     ROLES    AGE     VERSION
k8s-master1   NotReady   master   49m     v1.21.4
k8s-node01    NotReady   node     3m45s   v1.21.4
k8s-node02    NotReady   node     3m49s   v1.21.4

# Keep regular workloads off the master
kubectl taint nodes k8s-master1 node-role.kubernetes.io/master=:NoSchedule

kubectl describe node k8s-master1
Taints:             node-role.kubernetes.io/master:NoSchedule
                    node.kubernetes.io/not-ready:NoSchedule
```
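When several nodes join at once, copying each CSR name by hand is error-prone. The hypothetical `pending_csrs` helper below reads `kubectl get csr` output and prints only the Pending requests from a given requestor, by default `kubelet-bootstrap`. It relies on CONDITION being the last column and REQUESTOR the second-to-last, as shown above. Review the list before approving it, because approving unknown CSRs defeats the purpose of the check.

```shell
#!/usr/bin/env bash
# Sketch: print names of Pending CSRs from one requestor.
# Input: `kubectl get csr` output on stdin; $1: requestor (default kubelet-bootstrap).
pending_csrs() {
  awk -v who="${1:-kubelet-bootstrap}" \
    'NR > 1 && $NF == "Pending" && $(NF-1) == who { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve`.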

8. Addons

8.1 CoreDNS

CoreDNS resolves Service names inside the cluster.

```shell
mkdir -p $HOME/k8s-install/coredns && cd $HOME/k8s-install/coredns
wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed
wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh
chmod +x deploy.sh

# The DNS Service IP must lie in the Service CIDR (10.96.0.0/16) and match clusterDNS in kubelet-config.yml
export CLUSTER_DNS_SVC_IP="10.96.0.2"
export CLUSTER_DNS_DOMAIN="cluster.local"
./deploy.sh -i ${CLUSTER_DNS_SVC_IP} -d ${CLUSTER_DNS_DOMAIN} | kubectl apply -f -

kubectl get pods -n kube-system | grep coredns
coredns-746fcb4bc5-nts2k                   1/1     Running   0          6m2s

# Test name resolution from a temporary Pod
kubectl run -it --rm dns-test --image=busybox:1.28.4 -- /bin/sh
If you don't see a command prompt, try pressing enter.
/ # nslookup kubernetes
Server:    10.96.0.2
Address:   10.96.0.2:53

Name:      kubernetes.default.svc.cluster.local
Address:   10.0.0.1
```

DNS troubleshooting:

```shell
kubectl get svc -n kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.2    <none>        53/UDP,53/TCP,9153/TCP   13m

kubectl get endpoints kube-dns -n kube-system
NAME       ENDPOINTS                                                AGE
kube-dns   10.244.85.194:53,10.244.85.194:53,10.244.85.194:9153   13m
```

CoreDNS configuration (Corefile plugins):

- log: logs every query received; useful while troubleshooting.
- errors: writes errors to standard output.
- health: CoreDNS serves a health check at http://localhost:8080/health; if it reports unhealthy, the Pod is restarted (through its liveness probe).
- ready: once all plugins have finished loading, HTTP status 200 is returned on port 8081 (path /ready), and the Pod is then added to the Service endpoints.
- kubernetes: CoreDNS answers DNS queries based on the IPs of Kubernetes Services and Pods.
- prometheus: exposes CoreDNS metrics when configured, at http://localhost:9153/metrics.
- forward: any query for a name outside the Kubernetes cluster domain is forwarded to the predefined upstream resolvers (/etc/resolv.conf).
- cache: enables caching with a TTL of up to 30 seconds.
- loop: detects simple forwarding loops and halts the CoreDNS process if one is found.
- reload: watches the Corefile and reloads the configuration automatically when it changes.
- loadbalance: DNS load balancer that shuffles the order of records in answers (round_robin by default).

```shell
kubectl edit configmap coredns -n kube-system
apiVersion: v1
data:
  Corefile: |
    .:53 {
        log
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"Corefile":".:53 {\n errors\n health {\n lameduck 5s\n }\n ready\n kubernetes cluster.local in-addr.arpa ip6.arpa {\n fallthrough in-addr.arpa ip6.arpa\n }\n prometheus :9153\n forward . /etc/resolv.conf {\n max_concurrent 1000\n }\n cache 30\n loop\n reload\n loadbalance\n}\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"coredns","namespace":"kube-system"}}
  creationTimestamp: "2021-05-13T11:57:45Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "38460"
  selfLink: /api/v1/namespaces/kube-system/configmaps/coredns
  uid: c62a856d-1fc3-4fe9-b5f1-3ca0dbeb39c1
```

Rollback:

```shell
wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/rollback.sh
chmod +x rollback.sh

export CLUSTER_DNS_SVC_IP="10.96.0.2"
export CLUSTER_DNS_DOMAIN="cluster.local"
./rollback.sh -i ${CLUSTER_DNS_SVC_IP} -d ${CLUSTER_DNS_DOMAIN} | kubectl apply -f -

kubectl delete --namespace=kube-system deployment coredns
```

8.2 Dashboard

```shell
mkdir -p $HOME/k8s-install/dashboard && cd $HOME/k8s-install/dashboard
curl https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml -o dashboard.yaml
kubectl apply -f dashboard.yaml

kubectl get pods -n kubernetes-dashboard -o wide
NAME                                         READY   STATUS    RESTARTS   AGE   IP               NODE          NOMINATED NODE   READINESS GATES
dashboard-metrics-scraper-79c5968bdc-xkm78   1/1     Running   0          23m   10.244.159.129   k8s-master1   <none>           <none>
kubernetes-dashboard-9f9799597-d8g8t         1/1     Running   0          23m   10.244.58.193    k8s-node02    <none>           <none>

kubectl get svc -n kubernetes-dashboard -o wide
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE   SELECTOR
dashboard-metrics-scraper   ClusterIP   10.96.14.1      <none>        8000/TCP   24m   k8s-app=dashboard-metrics-scraper
kubernetes-dashboard        ClusterIP   10.96.219.125   <none>        443/TCP    24m   k8s-app=kubernetes-dashboard

# Expose the dashboard on a NodePort: change "type: ClusterIP" to "type: NodePort"
kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

kubectl get svc -n kubernetes-dashboard -o wide
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE     SELECTOR
dashboard-metrics-scraper   ClusterIP   10.96.14.1      <none>        8000/TCP        3h30m   k8s-app=dashboard-metrics-scraper
kubernetes-dashboard        NodePort    10.96.219.125   <none>        443:31639/TCP   3h30m   k8s-app=kubernetes-dashboard

# Create an admin service account and print its login token
kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
Name:         dashboard-admin-token-xwd72
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin
              kubernetes.io/service-account.uid: 013e9f84-827f-4dc7-81b3-874a28bfebc6

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1310 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6InNQRElCQTlPRUZ5SU54STQ1QWllLXlKMTFCcmZieG0wVTJnRlpzYlBNLXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4teHdkNzIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMDEzZTlmODQtODI3Zi00ZGM3LTgxYjMtODc0YTI4YmZlYmM2Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.O-DI-0IlLFP2pDRKzQYJrZeDAnVvW1IjU-iVwGzvwID7BH0v6kXfWnti07qm8VkuGFJtpuQsmrf6v4sUeRDhr95kZlEVV8Rxnes6oixrkXdk3fR4xreh4lh6ZgCzbER6xI8pMG-j9KNjTRdY6gQPJuOThtI9ab13dpTT5AYpggA2O98DFfgcJ_DzD05hhk6TghOdoro00msHRSUrsEiH0CYa_3PiyPlkvmmY3MlJPsBTdO2pCDzcrjQ2L5EaJAvSh6OodkRY6ymOwfcbfPs3WwSocCEfwkogYOCAQhMC4NU3Jea_hoeFqzLdS1PK5R2rPT-wqemwjDKn0E6jUv6juw
```

Open https://192.168.80.45:31639 in a browser and log in with the token above.
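The NodePort is assigned by the cluster, so it will usually differ from 31639. The hypothetical `dashboard_url` helper below reads `kubectl get svc -n kubernetes-dashboard` output and builds the login URL for a given node IP. It assumes PORT(S) is the fifth column, as in the output above.

```shell
#!/usr/bin/env bash
# Sketch: build the dashboard URL from `kubectl get svc` output on stdin.
# $1: IP of any node; PORT(S) is assumed to be column 5 ("443:31639/TCP").
dashboard_url() {
  awk -v ip="$1" '$1 == "kubernetes-dashboard" && $2 == "NodePort" {
    split($5, p, "[:/]")   # p[1]=service port, p[2]=node port, p[3]=protocol
    print "https://" ip ":" p[2]
  }'
}
```

Usage: `kubectl get svc -n kubernetes-dashboard | dashboard_url 192.168.80.45`. A non-interactive alternative to the `kubectl edit` step is `kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'`.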

9. High availability

| Role | IP | Components | Notes |
| --- | --- | --- | --- |
| k8s-master1 | 192.168.80.45 | etcd, api-server, controller-manager, scheduler, kubelet, kube-proxy, docker | |
| k8s-node01 | 192.168.80.46 | etcd, kubelet, kube-proxy, docker | |
| k8s-node02 | 192.168.80.47 | etcd, kubelet, kube-proxy, docker | |
| k8s-master2 | 192.168.80.49 | etcd, api-server, controller-manager, scheduler, kubelet, kube-proxy, docker | newly added node |

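9.1 Preparation (on k8s-master1)

9.1.1 Update the kube-apiserver certificate

This step is required only if the new node's IP address is not yet included in the certificate: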

```shell
# On k8s-master1; assumes the CA files from section 3 (ca.pem, ca-key.pem, ca-config.json) are in this directory
mkdir -p /root/ssl && cd /root/ssl
cat > apiserver-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.80.1",
    "192.168.80.2",
    "192.168.80.3",
    "192.168.80.45",
    "192.168.80.46",
    "192.168.80.47",
    "192.168.80.48",
    "192.168.80.49",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver

cp apiserver*.pem /etc/kubernetes/pki
scp apiserver*.pem ubuntu@192.168.80.46:/home/ubuntu
scp apiserver*.pem ubuntu@192.168.80.47:/home/ubuntu

# On k8s-node01 and k8s-node02
chown root:root /home/ubuntu/apiserver*.pem
mv /home/ubuntu/apiserver*.pem /etc/kubernetes/pki

# On k8s-master1
systemctl restart kube-apiserver
systemctl status kube-apiserver
```
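Before signing, you can check that every master, load balancer and VIP address appears in the CSR. The hypothetical `missing_csr_hosts` helper below prints each given address that does not appear as a quoted string in the CSR JSON file. It is a plain text match over the whole file, not a JSON parser, so it is only meant for IP addresses.

```shell
#!/usr/bin/env bash
# Sketch: print addresses missing from a cfssl CSR JSON file.
# $1: CSR file; remaining args: addresses that must be present as "ADDR".
missing_csr_hosts() {
  local file=$1 h
  shift
  for h in "$@"; do
    grep -qF "\"$h\"" "$file" || echo "$h"
  done
}
```

Usage: `missing_csr_hosts apiserver-csr.json 192.168.80.1 192.168.80.45 192.168.80.49`. After signing, `openssl x509 -in apiserver.pem -noout -text | grep -A1 'Subject Alternative Name'` shows the SANs actually embedded in the certificate.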

9.1.2 Add the host entry

Run this on k8s-master1, k8s-node01 and k8s-node02:

```shell
echo '192.168.80.49 k8s-master2' >> /etc/hosts
```

9.2 Scale out the masters

9.2.1 Initialization (on k8s-master2)

```shell
hostnamectl set-hostname k8s-master2

cat >> /etc/hosts << EOF
192.168.80.45 k8s-master1
192.168.80.46 k8s-node01
192.168.80.47 k8s-node02
192.168.80.49 k8s-master2
EOF

# Disable swap now and after reboot
swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

echo "nameserver 8.8.8.8" >> /etc/resolv.conf

# Time synchronization
apt install ntpdate -y
ntpdate ntp1.aliyun.com
crontab -e
# add the following entry:
*/30 * * * * /usr/sbin/ntpdate -u ntp1.aliyun.com >> /var/log/ntpdate.log 2>&1

mkdir -p /var/log/kubernetes
```

9.2.2 Clone

```shell
# On k8s-master1: package binaries, unit files, configuration and certificates
mkdir -p $HOME/k8s-install && cd $HOME/k8s-install
tar zcvf master-node-clone.tar.gz /usr/bin/kube* /lib/systemd/system/kube*.service /etc/kubernetes /root/.kube/config /usr/bin/docker* /usr/bin/runc /usr/bin/containerd* /usr/bin/ctr /etc/docker /lib/systemd/system/docker.service
scp master-node-clone.tar.gz ubuntu@192.168.80.49:/home/ubuntu

# On k8s-master2: unpack at / and remove k8s-master1's kubelet identity
# (the CNI plugins from section 6.1 are also needed on this node)
cd / && mv /home/ubuntu/master-node-clone.tar.gz / && tar zxvf master-node-clone.tar.gz && rm -f master-node-clone.tar.gz
rm -f /etc/kubernetes/kubelet.kubeconfig
rm -f /etc/kubernetes/pki/kubelet*
```

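9.2.3 Update the configuration

```shell
# Make kube-apiserver bind to and advertise k8s-master2's own address
vi /etc/kubernetes/kube-apiserver.conf
--bind-address=192.168.80.49 \
--advertise-address=192.168.80.49 \

# Replace the node name and point the local components at k8s-master2's apiserver
sed -i 's#k8s-master1#k8s-master2#' /etc/kubernetes/*
sed -i 's#192.168.80.45:6443#192.168.80.49:6443#' /etc/kubernetes/*

vi /root/.kube/config
server: https://192.168.80.49:6443
```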

9.2.4 Start on boot

```shell
systemctl daemon-reload
systemctl start docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy
systemctl status docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy
systemctl enable docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy
```

9.2.5 Cluster status

```shell
kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
```

9.2.6 Join the cluster

```shell
kubectl get csr
NAME                                                   AGE     SIGNERNAME                                    REQUESTOR           CONDITION
node-csr-HfzAqSEc7sIIG9QFHip4vGFnFZhyZnYjBVGWQyGpz54   7m49s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending

kubectl certificate approve node-csr-HfzAqSEc7sIIG9QFHip4vGFnFZhyZnYjBVGWQyGpz54

kubectl get node
NAME          STATUS     ROLES    AGE   VERSION
k8s-master1   Ready      master   27h   v1.21.4
k8s-master2   NotReady   <none>   11s   v1.21.4
k8s-node01    Ready      node     27h   v1.21.4
k8s-node02    Ready      node     27h   v1.21.4
```

9.2.7 Labels and taints

```shell
kubectl label node k8s-master2 node-role.kubernetes.io/master=
kubectl taint nodes k8s-master2 node-role.kubernetes.io/master=:NoSchedule

kubectl get nodes --show-labels
NAME          STATUS   ROLES    AGE     VERSION   LABELS
k8s-master1   Ready    master   27h     v1.21.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master1,kubernetes.io/os=linux,node-role.kubernetes.io/master=
k8s-master2   Ready    master   2m13s   v1.21.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master2,kubernetes.io/os=linux,node-role.kubernetes.io/master=
k8s-node01    Ready    node     27h     v1.21.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux,node-role.kubernetes.io/node=
k8s-node02    Ready    node     27h     v1.21.4   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02,kubernetes.io/os=linux,node-role.kubernetes.io/node=
```

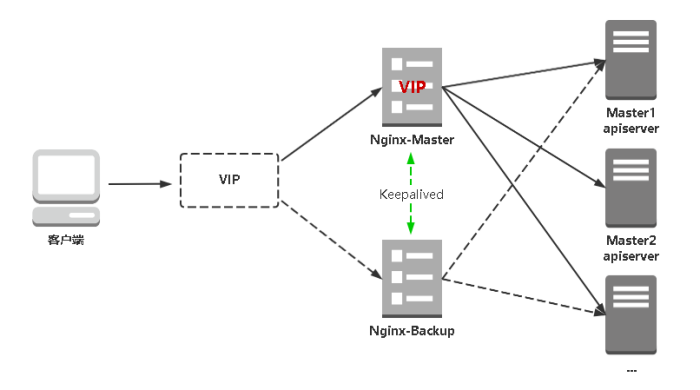

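9.3 High-availability load balancing

Nginx: a mainstream web server and reverse proxy. Here it load-balances kube-apiserver at Layer 4 (TCP) through its `stream` module.

Keepalived: mainstream high-availability software that provides active/standby failover between two servers by binding a virtual IP (VIP). Keepalived decides whether to fail over (move the VIP) based on the state of Nginx. For example, if the Nginx master node goes down, the VIP is automatically bound to the Nginx backup node, so the VIP stays reachable and Nginx remains highly available.

Server plan:

| Role | IP | Components |
| --- | --- | --- |
| k8s-master1 | 192.168.80.45 | kube-apiserver |
| k8s-master2 | 192.168.80.49 | kube-apiserver |
| k8s-loadbalancer1 | 192.168.80.2 | nginx, keepalived |
| k8s-loadbalancer2 | 192.168.80.3 | nginx, keepalived |
| VIP | 192.168.80.1 | virtual IP |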

9.3.1 Install the software (on both load balancers)

```shell
apt install nginx keepalived -y
```

9.3.2 Configure Nginx

```shell
cat > /etc/nginx/nginx.conf << "EOF"
# Ubuntu's nginx package runs as www-data (it creates no nginx user) and
# loads dynamic modules such as stream through /etc/nginx/modules-enabled
user www-data;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 1024;
}

# Layer-4 (TCP) load balancing for kube-apiserver
stream {
    log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

    access_log  /var/log/nginx/k8s-access.log  main;

    upstream k8s-apiserver {
        server 192.168.80.45:6443;   # k8s-master1
        server 192.168.80.49:6443;   # k8s-master2
    }

    server {
        listen 16443;
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 2048;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    server {
        listen       80 default_server;
        server_name  _;

        location / {
        }
    }
}
EOF
```

9.3.3 keepalived configuration (master)

```shell
cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

# Health-check script
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33            # change to the actual NIC name
    virtual_router_id 51       # VRRP router ID; unique for each instance
    priority 100               # priority; the backup server uses 90
    advert_int 1               # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        192.168.80.1/24
    }
    track_script {
        check_nginx
    }
}
EOF
```

9.3.4 keepalived configuration (slave)

```shell
cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_BACKUP
}

# Health-check script
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33            # change to the actual NIC name
    virtual_router_id 51       # VRRP router ID; unique for each instance
    priority 90                # priority; the backup server uses 90
    advert_int 1               # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        192.168.80.1/24
    }
    track_script {
        check_nginx
    }
}
EOF
```
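The master and slave files above differ only in `router_id`, `state` and `priority`. The sketch below renders both from one template so the two copies cannot drift apart; `render_keepalived` is a hypothetical helper. For brevity it omits the `notification_email` settings and the inline comments. The interface and VIP default to the values used in this guide. `virtual_router_id` must stay identical on both machines so they form one VRRP group.

```shell
#!/usr/bin/env bash
# Sketch: print keepalived.conf for the master or backup load balancer.
# $1: master|backup; $2: interface (default ens33); $3: VIP (default 192.168.80.1/24)
render_keepalived() {
  local role=$1 iface=${2:-ens33} vip=${3:-192.168.80.1/24}
  local state priority router_id
  case "$role" in
    master) state=MASTER; priority=100; router_id=NGINX_MASTER ;;
    backup) state=BACKUP; priority=90;  router_id=NGINX_BACKUP ;;
    *) echo "usage: render_keepalived master|backup [iface] [vip]" >&2; return 1 ;;
  esac
  cat <<EOF
global_defs {
   router_id ${router_id}
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

Usage: `render_keepalived master > /etc/keepalived/keepalived.conf` on one load balancer and `render_keepalived backup > /etc/keepalived/keepalived.conf` on the other.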

9.3.5 keepalived check script

```shell
cat > /etc/keepalived/check_nginx.sh << "EOF"
#!/bin/bash
# Count lines in the socket list that mention port 16443; 0 means nginx is not serving it
count=$(ss -antp | grep 16443 | egrep -cv "grep|$$")

if [ "$count" -eq 0 ]; then
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh
```
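The script above counts any socket line containing `16443`. That could also match, for example, an established connection to a remote port 16443 or a port such as 164430. A stricter variant, sketched here as a hypothetical `port_listening` helper, parses `ss -lnt` output. It succeeds only if a LISTEN socket has exactly 16443 as its local port. It assumes the local address is the fourth column, which is the usual `ss -lnt` layout.

```shell
#!/usr/bin/env bash
# Sketch: exit 0 if `ss -lnt` output (stdin) shows a LISTEN socket on port $1.
port_listening() {
  awk -v port="$1" '
    NR > 1 && $1 == "LISTEN" {
      n = split($4, a, ":")          # works for 0.0.0.0:16443 and [::]:16443
      if (a[n] == port) found = 1
    }
    END { exit (found ? 0 : 1) }'
}
```

Usage: define the function in `/etc/keepalived/check_nginx.sh` and end the script with `ss -lnt | port_listening 16443`. The script's exit status is then 0 only while Nginx is listening.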

9.3.6 Start the services

```shell
systemctl daemon-reload
systemctl start nginx keepalived
systemctl enable nginx keepalived
```

9.3.7 Status check

```shell
# On the active load balancer, the VIP 192.168.80.1 should be bound to the interface
ip addr

curl -k https://192.168.80.1:16443/version
{
  "major": "1",
  "minor": "19",
  "gitVersion": "v1.21.4",
  "gitCommit": "c6a2f08fc4378c5381dd948d9ad9d1080e3e6b33",
  "gitTreeState": "clean",
  "buildDate": "2021-05-12T12:19:22Z",
  "goVersion": "go1.15.12",
  "compiler": "gc",
  "platform": "linux/amd64"
}
```

9.3.8 Connect worker nodes to the LB VIP

```shell
# On each worker node: replace k8s-master1's apiserver address with the VIP and Nginx port
sed -i 's#192.168.80.45:6443#192.168.80.1:16443#' /etc/kubernetes/*
systemctl restart kubelet kube-proxy

kubectl get node
NAME          STATUS   ROLES    AGE     VERSION
k8s-master1   Ready    master   3d17h   v1.21.4
k8s-master2   Ready    master   2d16h   v1.21.4
k8s-node01    Ready    node     3d15h   v1.21.4
k8s-node02    Ready    node     3d15h   v1.21.4
```

10. Delete a node

```shell
systemctl stop kubelet

kubectl get nodes
NAME          STATUS     ROLES    AGE   VERSION
k8s-master1   Ready      master   40h   v1.21.4
k8s-master2   NotReady   master   12h   v1.21.4
k8s-node01    Ready      node     40h   v1.21.4
k8s-node02    Ready      node     40h   v1.21.4

# Evict Pods; DaemonSet-managed Pods (e.g. calico-node) must be explicitly ignored
kubectl drain k8s-master2
node/k8s-master2 cordoned
error: unable to drain node "k8s-master2", aborting command...

There are pending nodes to be drained:
 k8s-master2
error: cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): kube-system/calico-node-lwj2r

kubectl drain k8s-master2 --ignore-daemonsets
node/k8s-master2 already cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/calico-node-lwj2r
node/k8s-master2 drained

kubectl get nodes
NAME          STATUS                     ROLES    AGE   VERSION
k8s-master1   Ready                      master   40h   v1.21.4
k8s-master2   Ready,SchedulingDisabled   master   12h   v1.21.4
k8s-node01    Ready                      node     39h   v1.21.4
k8s-node02    Ready                      node     39h   v1.21.4

kubectl uncordon k8s-master2
node/k8s-master2 uncordoned

kubectl get nodes
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    master   40h   v1.21.4
k8s-master2   Ready    master   12h   v1.21.4
k8s-node01    Ready    node     39h   v1.21.4
k8s-node02    Ready    node     39h   v1.21.4

kubectl delete node k8s-master2

kubectl get nodes
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    master   41h   v1.21.4
k8s-node01    Ready    node     40h   v1.21.4
k8s-node02    Ready    node     40h   v1.21.4
```

Z. Appendix: component log levels

```
--v=0  Generally useful for this to ALWAYS be visible to an operator.
--v=1  A reasonable default log level if you don’t want verbosity.
--v=2  Useful steady state information about the service and important log messages that may correlate to significant changes in the system. This is the recommended default log level for most systems.
--v=3  Extended information about changes.
--v=4  Debug level verbosity.
--v=6  Display requested resources.
--v=7  Display HTTP request headers.
--v=8  Display HTTP request contents.
```